Author: rootfs

Looking at AWS Services Pricing Disparity

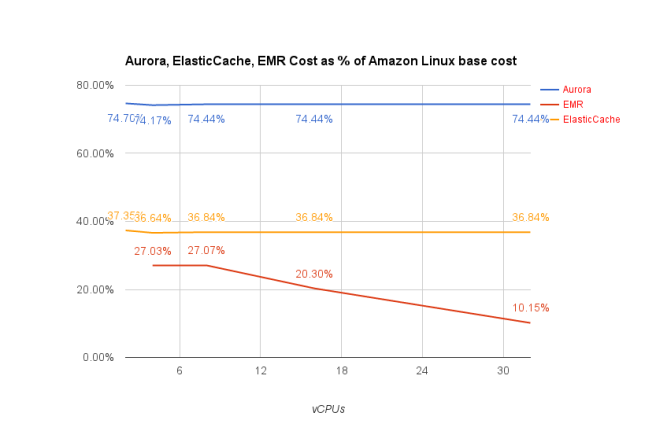

Not every bit costs the same across AWS services; a quick plot tells the story.

EMR is mostly based on the community Apache Bigtop release with AWS’s own patches. It is unclear why the curve drifts at higher vCPU counts, especially considering that Hadoop scales across multiple cores. The margin is noticeably lower than the others, probably due to the volume sale effect.

ElastiCache, leveraging Redis and Memcached, appears to be more of a deployment-optimized service. The ElastiCache documents mention nothing more than better usability, so I assume AWS doesn’t change Redis or Memcached. A 36% hike is quite something compared to the less than 20% hike for using a third-party OS. The pricing linearity puzzles me, especially when it comes to Memcached, which is known to scale sub-linearly with more threads.

Aurora commands a winning 74% hike. Aurora is AWS’s private MySQL fork, with multiple enhancements spanning storage, network, and clustering. Percona has good blog posts on Aurora. It appears to me that Aurora builds these enhancements on internal AWS APIs and is thus able to beat others that just use EC2 instances.
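The "hike" figures above read as a markup over the bare EC2 instance price. A minimal sketch of that arithmetic, assuming this is how the percentages were derived; the prices are placeholders chosen to reproduce the 36% and 74% figures, not real AWS quotes, and `markupPercent` is a name made up for illustration.

```go
package main

import "fmt"

// markupPercent returns how much more a managed service costs per hour
// than the bare EC2 instance it runs on, as a percentage of the EC2 price.
func markupPercent(ec2Hourly, serviceHourly float64) float64 {
	return (serviceHourly - ec2Hourly) / ec2Hourly * 100
}

func main() {
	// Placeholder prices, NOT real AWS quotes: a $1.00/h instance
	// offered as a managed service at $1.36/h and at $1.74/h.
	const ec2 = 1.00
	for _, service := range []float64{1.36, 1.74} {
		fmt.Printf("$%.2f/h over $%.2f/h: %.0f%% hike\n",
			service, ec2, markupPercent(ec2, service))
	}
}
```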

How Good Is AWS Aurora?

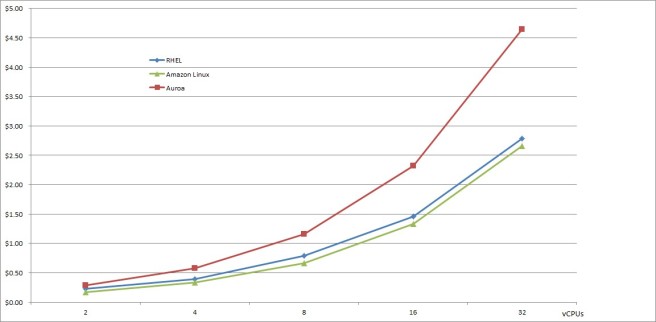

AWS Aurora is Amazon’s private MySQL fork. It claims a 5x performance boost over stock MySQL (though Percona disagrees; their benchmarks suggest 2x).

But what does it really cost, especially when you can get MySQL from Linux distributions like RHEL?

I plotted the r3 instance pricing curves based on the AWS pricing information. RHEL costs about 6 cents more than Amazon Linux, yet the Aurora cost almost doubles.

With Percona’s 2x speedup and a roughly doubled price, the price/performance ratio against stock MySQL returns to about 1. YMMV, ouch!
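The arithmetic behind that ratio, as a small sketch. It rounds "almost doubles" up to a cost ratio of 2.0 (an assumption for simplicity) and compares the 5x claim with the 2x Percona figure; `relativeValue` is a name made up for illustration.

```go
package main

import "fmt"

// relativeValue returns the speedup obtained per unit of relative cost,
// both expressed as multiples of the stock-MySQL baseline.
func relativeValue(speedup, costRatio float64) float64 {
	return speedup / costRatio
}

func main() {
	const costRatio = 2.0 // "almost doubles", rounded up (assumption)
	fmt.Printf("AWS claim (5x):      %.1f\n", relativeValue(5, costRatio))
	fmt.Printf("Percona figure (2x): %.1f\n", relativeValue(2, costRatio))
}
```

A value of 1.0 means you pay exactly in proportion to what you gain; anything above 1.0 favors Aurora.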

Gluster as Block Storage with qemu-tcmu

In this blog we shall see Terminology and background Our approach Setting up Gluster Setup Tcmu-Runner Qemu and Target Setup iSCSI Initiator Conclusion Similar Topics Terminology and background Glu…

Towards a Universal Storage Control Plane

As time flies, the goals for a universal storage control plane keep evolving.

- libStorageMgmt is an SMI-S based framework. It supports C and Python bindings.

- OpenStack Cinder manages block storage on heterogeneous backends. It is mostly used for virtualized environments (Nova), although the undercloud could also use some Cinder volumes.

- OpenStack Fuxi is a young project with an ambitious goal of storage management for Containers, VMs, and bare metal machines.

- Dell/EMC projects that are not yet widely interoperable:

  - ViPR

  - Rex-Ray and libStorage

- Virtualized backends, which present virtualized block storage through a well-defined image format or API. The control plane and data plane blur because both are specified by the image format or the API.

- Container engines and orchestrators:

  - Kubernetes and Docker Volumes support cloud, virtualized, and NAS storage: AWS, GCE, OpenStack, Azure, vSphere, Ceph, Gluster, iSCSI, NFS, Fibre Channel, Quobyte, Flocker, etc.

  - Storage features such as provisioning, attach/detach, snapshot, quota, resize, and protection are either implemented or on the roadmap.

Random Thoughts: Hardened Docker Container, Unikernel and SGX

Recently I read several interesting research and analyst papers on Docker container security.

SCONE: Secure Containers using Intel SGX describes an implementation that puts a container in a secure enclave without a large footprint or performance loss.

Hardening Linux Container is a very comprehensive analysis of Containers, virtualization, and underlying technologies. I find it very rewarding to read it twice. So is the other white paper Abusing Privileged and Unprivileged Linux Containers.

So far, all of these are based on Docker containers that run on top of a general-purpose OS (Linux). There are limitations and false claims.

What about running a unikernel in SGX? It is entirely possible, per Solo5. And what about the next step: making a unikernel running inside SGX a new container runtime for Kubernetes, just like Docker, rkt, and hyper?

ContainerCon 2016: Random Notes

There were quite a few good talks.

Hypernetes

I wrote about hyper a while back. Now it is full-fledged Hypernetes: it runs Docker images in VMs, is Neutron enabled, and has a modified Cinder volume plugin. And it is Kubernetes outside! The folks at hyper did lots of hard work.

The talk itself got lots of attention. Dr. Zhang posted his slides. I must admit he did a good job walking through Kubernetes in a very short time.

Open SDS

It is a widely felt frustration across the industry that the look and feel of multi-vendor storage differs, and that management frameworks (e.g. Cinder, Kubernetes, and Mesos) have to take pains to abstract a common interface over them.

Open SDS aims to end this by starting a cross-vendor collaboration. It was interesting to see Huawei and Dell EMC standing on the stage together.

QEMU+TCMU

I talked about the opportunities that a converged QEMU and TCMU offer. Think of Cinder, but without needing Nova to access storage. While there are efforts to bring Cinder (and hypervisor storage) to bare metal and containers, QEMU+TCMU is probably one of the most promising frameworks.

Brutal ssh attackers experienced

My VM running on one of the public clouds witnessed a brutal SSH attack. During its mere 12 days of uptime, a grand total of 101,329 failed SSH logins were logged.

Below is a sample of the IPs and their frequencies.

| # of Attacks | IP |
| --- | --- |
| 10 | 180.101.185.9 |
| 10 | 58.20.125.166 |
| 10 | 82.85.187.101 |
| 11 | 112.83.192.246 |
| 11 | 13.93.146.130 |
| 11 | 40.69.27.198 |
| 14 | 101.4.137.29 |
| 14 | 162.209.75.137 |
| 14 | 61.178.42.242 |
| 19 | 14.170.249.105 |
| 20 | 220.181.167.188 |
| 22 | 185.110.132.201 |
| 23 | 155.94.142.13 |
| 23 | 173.242.121.52 |
| 24 | 155.94.163.14 |
| 25 | 154.16.199.47 |
| 28 | 184.106.69.36 |
| 29 | 185.2.31.10 |
| 29 | 58.213.69.180 |
| 30 | 163.172.201.33 |
| 32 | 185.110.132.89 |
| 37 | 211.144.95.195 |
| 42 | 117.135.131.60 |
| 48 | 91.224.160.106 |
| 60 | 91.224.160.131 |
| 72 | 80.148.4.58 |
| 72 | 91.224.160.108 |
| 73 | 91.201.236.155 |
| 84 | 91.224.160.184 |
| 90 | 222.186.21.36 |
| 146 | 180.97.239.9 |
| 146 | 91.201.236.158 |
| 9465 | 218.65.30.56 |
| 15885 | 116.31.116.18 |
| 17163 | 218.65.30.4 |
| 17164 | 182.100.67.173 |
| 17164 | 218.65.30.152 |
| 23006 | 116.31.116.11 |
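A tally like this one can be produced with a few lines of Go. This is only a sketch, not the script used for the table: it assumes the usual OpenSSH "Failed password ... from IP port N" log line, and the sample log uses documentation-range addresses rather than real attackers. `countFailures` is a name made up for illustration.

```go
package main

import (
	"bufio"
	"fmt"
	"regexp"
	"sort"
	"strings"
)

// failedLogin matches an OpenSSH "Failed password" line and captures
// the source IPv4 address (assumed log format).
var failedLogin = regexp.MustCompile(
	`Failed password for .* from (\d{1,3}(?:\.\d{1,3}){3}) port`)

// countFailures returns the number of failed logins per source IP.
func countFailures(log string) map[string]int {
	counts := make(map[string]int)
	sc := bufio.NewScanner(strings.NewReader(log))
	for sc.Scan() {
		if m := failedLogin.FindStringSubmatch(sc.Text()); m != nil {
			counts[m[1]]++
		}
	}
	return counts
}

func main() {
	// Made-up sample log; the addresses are from documentation ranges.
	sample := `Aug  1 10:00:01 vm sshd[101]: Failed password for root from 192.0.2.10 port 4242 ssh2
Aug  1 10:00:03 vm sshd[102]: Failed password for invalid user admin from 192.0.2.10 port 4243 ssh2
Aug  1 10:00:05 vm sshd[103]: Accepted publickey for me from 198.51.100.7 port 5000 ssh2
Aug  1 10:00:09 vm sshd[104]: Failed password for root from 203.0.113.5 port 6000 ssh2`

	counts := countFailures(sample)
	ips := make([]string, 0, len(counts))
	for ip := range counts {
		ips = append(ips, ip)
	}
	// Sort by ascending count, then by IP, like the table above.
	sort.Slice(ips, func(i, j int) bool {
		if counts[ips[i]] != counts[ips[j]] {
			return counts[ips[i]] < counts[ips[j]]
		}
		return ips[i] < ips[j]
	})
	fmt.Println("| # of Attacks | IP |")
	for _, ip := range ips {
		fmt.Printf("| %d | %s |\n", counts[ip], ip)
	}
}
```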

Create a VHD blob using Azure Go SDK

There are no good tutorials on how to create a VHD blob that can serve as a VM’s Data Disk on Azure, so I wrote some notes on it, based on my recent Kubernetes PR 30091, which is part of the effort to support Azure Data Disk dynamic provisioning.

This work uses Azure’s ASM and ARM modes: ARM mode to extract Storage Account name, key, Sku tier, location; ASM mode to list, create, and delete Azure Page Blob.

When a request comes in for an Azure Storage account with Sku tier Standard_LRS and location eastus, all Storage accounts are listed first. This is accomplished by getStorageAccounts(), which calls ListByResourceGroup() in the Azure Go SDK. Each account returns its name, location, and Sku tier. Once a matching account is identified, the access key is retrieved via getStorageAccesskey(), which calls the Azure SDK’s ListKeys().
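The matching step can be sketched without touching the SDK. The `storageAccount` type and `findAccount` helper below are hypothetical stand-ins for whatever the listing returns, not types from the Azure Go SDK or from the PR, and the account names are made up.

```go
package main

import (
	"fmt"
	"strings"
)

// storageAccount is a hypothetical stand-in holding the three fields
// each listed account is said to return.
type storageAccount struct {
	Name, Location, SkuName string
}

// findAccount returns the first account whose Sku tier and location
// match the request (compared case-insensitively).
func findAccount(accounts []storageAccount, skuName, location string) (storageAccount, bool) {
	for _, a := range accounts {
		if strings.EqualFold(a.SkuName, skuName) && strings.EqualFold(a.Location, location) {
			return a, true
		}
	}
	return storageAccount{}, false
}

func main() {
	// Made-up accounts for illustration.
	accounts := []storageAccount{
		{Name: "acctwest", Location: "westus", SkuName: "Standard_LRS"},
		{Name: "accteast", Location: "eastus", SkuName: "Standard_LRS"},
	}
	if a, ok := findAccount(accounts, "Standard_LRS", "eastus"); ok {
		fmt.Println("would fetch the access key for", a.Name)
	} else {
		fmt.Println("no matching storage account")
	}
}
```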

Creating a VHD blob must use the classic Storage API, which requires the account name and access key. createVhdBlob takes the account name and key and creates a VHD blob in the account’s vhds Container. This uses the Azure SDK’s PutPageBlob() method. Once the Page Blob is created, a VHD footer must be written at the end of the blob. This is currently accomplished in my forked go-vhd, which is also upstreamed. The VHD footer is appended to the blob by calling the SDK’s PutPage() method.

Now that the Page Blob is created, it can be used as an Azure VM’s Data Disk.

A prototype of data disk dynamic provisioning on Kubernetes can be found at my branch. A quick demo follows.
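To make the footer step concrete, here is a sketch of the 512-byte footer of a fixed-size VHD, following the field layout, checksum rule, and CHS calculation from the published VHD format specification. It is not the go-vhd code and makes no Azure calls; the creator application tag "demo", the creator version, and the helper names are placeholders.

```go
package main

import (
	"crypto/rand"
	"encoding/binary"
	"fmt"
	"time"
)

const footerSize = 512

// chs derives the cylinder/head/sector geometry from the disk size,
// using the calculation given in the VHD format specification.
func chs(diskSize uint64) (cyl uint16, heads, spt uint8) {
	total := diskSize / 512
	if total > 65535*16*255 {
		total = 65535 * 16 * 255
	}
	var s, h, ch uint64
	if total >= 65535*16*63 {
		s, h = 255, 16
		ch = total / s
	} else {
		s = 17
		ch = total / s
		h = (ch + 1023) / 1024
		if h < 4 {
			h = 4
		}
		if ch >= h*1024 || h > 16 {
			s, h = 31, 16
			ch = total / s
		}
		if ch >= h*1024 {
			s, h = 63, 16
			ch = total / s
		}
	}
	return uint16(ch / h), uint8(h), uint8(s)
}

// newFixedFooter builds the footer for a fixed disk of diskSize bytes.
// stamp is the number of seconds since 2000-01-01 00:00:00 UTC.
func newFixedFooter(diskSize uint64, stamp uint32, id [16]byte) [footerSize]byte {
	var f [footerSize]byte
	be := binary.BigEndian
	copy(f[0:8], "conectix")                   // cookie
	be.PutUint32(f[8:12], 0x00000002)          // features: reserved bit, always set
	be.PutUint32(f[12:16], 0x00010000)         // file format version 1.0
	be.PutUint64(f[16:24], 0xFFFFFFFFFFFFFFFF) // data offset: unused for fixed disks
	be.PutUint32(f[24:28], stamp)              // creation time stamp
	copy(f[28:32], "demo")                     // creator application (placeholder)
	be.PutUint32(f[32:36], 0x00010000)         // creator version (placeholder)
	copy(f[36:40], "Wi2k")                     // creator host OS: Windows
	be.PutUint64(f[40:48], diskSize)           // original size
	be.PutUint64(f[48:56], diskSize)           // current size
	c, h, s := chs(diskSize)
	be.PutUint16(f[56:58], c) // disk geometry: cylinders,
	f[58], f[59] = h, s       // heads, sectors per track
	be.PutUint32(f[60:64], 2) // disk type: fixed
	copy(f[68:84], id[:])     // unique ID
	// Checksum: one's complement of the byte sum, computed while the
	// checksum field itself (bytes 64..67) is still zero.
	var sum uint32
	for _, b := range f {
		sum += uint32(b)
	}
	be.PutUint32(f[64:68], ^sum)
	return f
}

func main() {
	const diskSize uint64 = 3 << 30 // example: a 3 GiB data disk
	var id [16]byte
	if _, err := rand.Read(id[:]); err != nil {
		panic(err)
	}
	epoch := time.Date(2000, 1, 1, 0, 0, 0, 0, time.UTC)
	footer := newFixedFooter(diskSize, uint32(time.Since(epoch).Seconds()), id)
	fmt.Printf("%d-byte footer, to be written at blob offset %d\n", len(footer), diskSize)
}
```

For a fixed disk the footer directly follows the data, so the blob has to be 512 bytes larger than the disk itself.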

First create a Storage Class

    kind: StorageClass
    apiVersion: extensions/v1beta1
    metadata:
      name: slow
    provisioner: kubernetes.io/azure-disk
    parameters:
      skuName: Standard_LRS
      location: eastus

Then create a Persistent Volume Claim like this

Once created, it should look like this:

    # _output/bin/kubectl describe pvc
    Name:          claim1
    Namespace:     default
    Status:        Bound
    Volume:        pvc-37ff3ec3-5ceb-11e6-88a3-000d3a12e034
    Labels:        <none>
    Capacity:      3Gi
    Access Modes:  RWO
    No events.

Create a Pod that uses the claim:

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nfs-server
    spec:
      replicas: 1
      selector:
        role: nfs-server
      template:
        metadata:
          labels:
            role: nfs-server
        spec:
          containers:
          - name: nfs-server
            image: nginx
            volumeMounts:
            - mountPath: /exports
              name: mypvc
          volumes:
          - name: mypvc
            persistentVolumeClaim:
              claimName: claim1

The Pod should run with the dynamically provisioned Data Disk.

Azure Block Storage Coming to Kubernetes

Microsoft Azure offers file (SMB) and block (VHD data disk) storage. Kubernetes already supports Azure file storage. Azure becomes the latest cloud provider in Kubernetes 1.4. As a follow-up, development of Azure block storage support has also started. A preliminary release is at https://github.com/kubernetes/kubernetes/pull/29836

Here is a quick tutorial to use data disk in a Pod.

First, create a cloud config file (e.g. /etc/cloud.conf) and fill your Azure credentials in the following format:

    {
      "aadClientID": "",
      "aadClientSecret": "",
      "subscriptionID": "",
      "tenantID": "",
      "resourceGroup": ""
    }

Tell the Kubernetes apiserver, controller-manager, and kubelet to use this cloud config file by adding the option --cloud-config=/etc/cloud.conf
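Before restarting the components, it can help to check that the file parses and that no key was left empty. The sketch below is a standalone checker, not part of Kubernetes; it assumes the file is plain JSON with exactly the five keys listed above, and the type and function names are made up for illustration.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strings"
)

// azureConfig mirrors the five keys of the cloud config file.
type azureConfig struct {
	AADClientID     string `json:"aadClientID"`
	AADClientSecret string `json:"aadClientSecret"`
	SubscriptionID  string `json:"subscriptionID"`
	TenantID        string `json:"tenantID"`
	ResourceGroup   string `json:"resourceGroup"`
}

// parseConfig decodes the JSON and lists the keys that are still empty.
func parseConfig(data []byte) (azureConfig, []string, error) {
	var c azureConfig
	if err := json.Unmarshal(data, &c); err != nil {
		return c, nil, err
	}
	fields := []struct{ key, val string }{
		{"aadClientID", c.AADClientID},
		{"aadClientSecret", c.AADClientSecret},
		{"subscriptionID", c.SubscriptionID},
		{"tenantID", c.TenantID},
		{"resourceGroup", c.ResourceGroup},
	}
	var missing []string
	for _, f := range fields {
		if strings.TrimSpace(f.val) == "" {
			missing = append(missing, f.key)
		}
	}
	return c, missing, nil
}

func main() {
	// Placeholder values; a real check would read /etc/cloud.conf instead.
	sample := []byte(`{
	  "aadClientID": "client-id",
	  "aadClientSecret": "secret",
	  "subscriptionID": "subscription-id",
	  "tenantID": "",
	  "resourceGroup": "my-group"
	}`)
	_, missing, err := parseConfig(sample)
	if err != nil {
		fmt.Println("invalid cloud config:", err)
		return
	}
	if len(missing) > 0 {
		fmt.Println("empty keys:", strings.Join(missing, ", "))
		return
	}
	fmt.Println("cloud config looks complete")
}
```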

Second, log in to your Azure portal and create some VHDs. This step will not be needed once dynamic provisioning is supported.

Then get the VHDs’ names and URIs and use them in your Pod as follows:

    apiVersion: v1
    kind: ReplicationController
    metadata:
      name: nfs-web
    spec:
      replicas: 1
      template:
        metadata:
          labels:
            role: web-frontend
        spec:
          containers:
          - name: web
            image: nginx
            volumeMounts:
            - name: disk1
              mountPath: "/usr/share/nginx/html"
            - name: disk2
              mountPath: "/mnt"
          volumes:
          - name: disk1
            azureDisk:
              diskName: test7.vhd
              diskURI: https://openshiftstoragede1802.blob.core.windows.net/vhds/test7.vhd
          - name: disk2
            azureDisk:
              diskName: test8.vhd
              diskURI: https://openshiftstoragede1802.blob.core.windows.net/vhds/test8.vhd

Once the Pod is created, you should see mount output similar to the following:

    /dev/sdd on /var/lib/kubelet/plugins/kubernetes.io/azure-disk/mounts/test7.vhd type ext4 (rw,relatime,seclabel,data=ordered)
    /dev/sdd on /var/lib/kubelet/pods/1ddf7491-57f9-11e6-94cd-000d3a12e034/volumes/kubernetes.io~azure-disk/disk1 type ext4 (rw,relatime,seclabel,data=ordered)
    /dev/sdc on /var/lib/kubelet/plugins/kubernetes.io/azure-disk/mounts/test8.vhd type ext4 (rw,relatime,seclabel,data=ordered)
    /dev/sdc on /var/lib/kubelet/pods/1ddf7491-57f9-11e6-94cd-000d3a12e034/volumes/kubernetes.io~azure-disk/disk2 type ext4 (rw,relatime,seclabel,data=ordered)